📱 Embedding LLMs Inside Mobile Apps

Mobile apps are no longer just interfaces for consuming content or completing tasks. In 2026, the most successful apps are becoming intelligent companions — capable of understanding users, reasoning over context, and responding in natural language. At the center of this transformation is the rise of Large Language Models (LLMs) embedded directly into mobile applications.

From AI tutors and fitness coaches to productivity assistants and customer-support copilots, LLM-powered mobile apps are redefining user experience. This blog explores what it really means to embed LLMs inside mobile apps, how it works, and why it’s becoming a must-have capability.

🚀 Why Mobile Apps Are Going AI-Native

Mobile apps are evolving from static tools into intelligent, adaptive experiences because smartphones are no longer just devices; they are deeply personal extensions of our daily lives. They travel with us everywhere and hold our conversations, routines, preferences, and behavior over time. When LLMs are embedded into mobile apps, this rich personal context can finally be turned into meaningful intelligence. AI-native apps don’t just respond to clicks; they interpret intent, anticipate needs, and adapt in real time. This shift is happening because traditional rule-based logic cannot keep up with the complexity, variability, and personalization modern users expect. AI fills that gap by enabling apps to reason over context, adapt to each user, and communicate in natural language.

In AI-native mobile apps, interaction moves away from rigid UI flows toward conversational and contextual experiences. Users no longer need to navigate endless screens or memorize where features live. Instead, they can simply ask questions, describe goals, or speak naturally, and the app figures out what to do. Workflows that once required multiple steps can often be handled in a single interaction. Over time, by drawing on stored preferences and past interactions, the app can tailor each response to what the user prefers and how they typically behave, making interactions faster and more relevant. This kind of adaptability is very hard to achieve with traditional, hard-coded app architectures.

From a business perspective, AI-native design fundamentally changes engagement and value creation. Apps that understand users at a deeper level tend to keep them active longer, reduce churn, and create stronger emotional connections. Instead of being opened only when needed, AI-powered apps can become companions that users rely on daily. This opens the door to premium experiences such as personalized coaching, intelligent recommendations, proactive alerts, and automated decision support, all of which can translate into new monetization opportunities. For businesses, AI-native apps are not just a feature upgrade; they are a strategic advantage in a crowded app ecosystem.

For users, the impact is even more tangible. AI-native mobile apps feel less like software and more like helpful assistants that listen, explain, guide, and support. They reduce cognitive load by handling complexity in the background, saving time and mental energy. Whether it’s learning something new, managing health, shopping smarter, or staying productive, users experience smoother, more human-centered interactions. As expectations rise, apps that fail to adopt AI-native principles risk feeling outdated, slow, and impersonal.

Key reasons mobile apps are becoming AI-native:

- Deep personalization using real user context, not generic rules

- Natural language interaction instead of complex UI navigation

- Dynamic workflows that adapt to changing user needs

- Higher user engagement and long-term retention

- New revenue models powered by intelligent features

- Reduced friction and improved user satisfaction

- Competitive differentiation in saturated app markets

In short, mobile apps are going AI-native because users no longer want tools that simply function — they want apps that understand them. AI is becoming the core layer that turns mobile software into intelligent, responsive, and truly personal experiences.

🧠 What Does “Embedding an LLM” Really Mean?

Embedding an LLM inside a mobile app does not usually mean squeezing a massive language model directly onto a phone and running it fully offline. In practice, it means designing the app so that LLM intelligence becomes a foundational layer of the product, rather than an optional add-on feature. The mobile app acts as the user-facing interface, while the LLM operates as the reasoning, understanding, and decision-making engine behind the scenes. This intelligence can be delivered through secure cloud APIs, lightweight on-device models, edge inference, or hybrid architectures that balance performance, privacy, and cost. What matters most is not where the model runs but how deeply it is integrated into the user experience.

When an LLM is embedded properly, it reshapes how the app behaves. Instead of responding only to predefined inputs, the app can interpret natural language, understand intent, reason over context, and generate meaningful outputs dynamically. Features like chat-based interaction, smart recommendations, content summarization, task automation, and decision support become native capabilities rather than bolted-on tools. Because the model typically retains no memory between requests, the app supplies it with relevant user actions, preferences, and history each time, which lets the experience evolve alongside the user. The result feels adaptive, conversational, and intelligent rather than static and transactional.

Technically, embedding an LLM also means connecting it with the app’s internal state and external systems. The model is often paired with user data, device signals, app context, and backend services to produce relevant responses. For example, a fitness app doesn’t just answer generic health questions — it reasons using the user’s activity data, goals, schedule, and past behavior. A finance app doesn’t simply explain budgeting — it analyzes transactions, detects patterns, and offers personalized insights. The LLM becomes a coordinator that understands data, asks the right questions, and decides what action or response makes sense in that moment.
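As a rough illustration of that pairing, here is a minimal Python sketch of how the fitness example might ground a request in app data before it reaches the model. It assumes a chat-style API that takes a list of role/content messages (a common convention, but not a universal one); `FitnessContext`, `build_messages`, and the field names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class FitnessContext:
    # Hypothetical app-side state; a real app would load this from its data layer.
    goal: str
    weekly_runs: list[float] = field(default_factory=list)  # km per run this week
    next_free_slot: str = "unknown"


def build_messages(question: str, ctx: FitnessContext) -> list[dict[str, str]]:
    """Combine app state with the user's question into a chat-style message list."""
    total_km = sum(ctx.weekly_runs)
    context_block = (
        f"User goal: {ctx.goal}\n"
        f"Runs this week: {len(ctx.weekly_runs)} ({total_km:.1f} km total)\n"
        f"Next free slot in calendar: {ctx.next_free_slot}"
    )
    return [
        {"role": "system", "content": "You are a fitness coach. Use only the context provided; "
                                      "if data is missing, say so instead of guessing."},
        {"role": "system", "content": "Context:\n" + context_block},
        {"role": "user", "content": question},
    ]
```

Keeping the context in its own clearly labeled message, and instructing the model to admit when data is missing, makes it easier to audit what the model was actually told.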

From a design perspective, embedding an LLM requires rethinking app architecture. Developers must consider latency, privacy, security, cost, and fallback behavior. Smart caching, prompt optimization, context management, and selective inference are essential to keep the experience fast and reliable. The best AI-native apps hide this complexity from users, delivering seamless intelligence that feels instant and trustworthy. Over time, as models improve and edge capabilities expand, more reasoning can shift closer to the device — but the core idea remains the same: the app is no longer just a container for features; it is a gateway to intelligence.
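Smart caching is the easiest of these to show concretely. The sketch below is a minimal in-memory cache with a time-to-live, keyed on a hash of the normalized prompt; `ResponseCache`, `answer`, and the injected `call_llm` function are hypothetical names, and a production app would likely persist the cache and bound its size.

```python
import hashlib
import time
from typing import Callable, Optional


class ResponseCache:
    """In-memory TTL cache for LLM responses, keyed on a hash of the normalized prompt."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without waiting
        self._store: dict[str, tuple[float, str]] = {}

    @staticmethod
    def _key(prompt: str) -> str:
        # Normalize whitespace and case so trivially different prompts share an entry.
        normalized = " ".join(prompt.lower().split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def get(self, prompt: str) -> Optional[str]:
        key = self._key(prompt)
        entry = self._store.get(key)
        if entry is None:
            return None
        stored_at, response = entry
        if self.clock() - stored_at > self.ttl:
            del self._store[key]  # expired entry
            return None
        return response

    def put(self, prompt: str, response: str) -> None:
        self._store[self._key(prompt)] = (self.clock(), response)


def answer(prompt: str, cache: ResponseCache, call_llm: Callable[[str], str]) -> str:
    cached = cache.get(prompt)
    if cached is not None:
        return cached  # no network round trip, no token cost
    response = call_llm(prompt)
    cache.put(prompt, response)
    return response
```

Only cache prompts whose answers do not depend on private or fast-changing user context (or include that context in the key); otherwise a cached reply can be served in the wrong situation or go stale.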

Key ideas behind embedding an LLM in a mobile app:

- The LLM acts as a reasoning engine, not just a chatbot

- Intelligence can run in the cloud, on-device, or in hybrid setups

- User context and app data are combined with model reasoning

- Features become adaptive instead of rule-based

- Natural language replaces rigid UI flows

- Automation and decision support become native capabilities

- The app shifts from displaying data to understanding and acting on it

In essence, embedding an LLM means transforming a mobile app from a static piece of software into an intelligent system that understands users, reasons about their needs, and actively helps them achieve their goals.

⚙️ Common Architectures for LLM-Powered Mobile Apps

☁️ Cloud-Based LLM Architectures

Cloud-based LLM architecture is currently the most widely adopted and fastest-growing approach for embedding AI into mobile applications. In this model, the mobile app acts primarily as an intelligent interface: it captures user input (text, voice, images, or contextual signals) and securely transmits that input to a cloud-hosted LLM via an API. The LLM processes the request using a large-scale model that is far too big to run locally on a phone, generates a response, and sends it back to the app for display or further action.
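On the client, that round trip usually amounts to an authenticated HTTPS request. The Python sketch below uses only the standard library; the endpoint URL and the JSON payload shape are placeholders, since every provider defines its own schema, and it assumes the bearer token is a short-lived credential issued by your own backend rather than a provider API key shipped inside the app.

```python
import json
import urllib.request

# Hypothetical endpoint and payload schema; real providers each define their own.
API_URL = "https://api.example.com/v1/chat"


def build_chat_request(messages: list[dict[str, str]], token: str,
                       max_tokens: int = 256) -> urllib.request.Request:
    """Package user input as an authenticated HTTPS POST to a cloud-hosted LLM."""
    body = json.dumps({"messages": messages, "max_tokens": max_tokens}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            # Assumed: short-lived, narrowly scoped token issued by your own backend.
            "Authorization": f"Bearer {token}",
        },
    )


def send(request: urllib.request.Request, timeout_s: float = 15.0) -> dict:
    # Blocking network call; a mobile client would run this off the UI thread.
    with urllib.request.urlopen(request, timeout=timeout_s) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

In a shipping app the equivalent code would live in the Swift or Kotlin networking layer, but the structure (serialize, authenticate, set a timeout, parse) stays the same.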

However, this approach also introduces trade-offs. Network latency can affect responsiveness, especially in regions with poor connectivity. Operational costs can grow quickly with high usage, and sensitive data must be carefully managed to avoid privacy risks. As a result, cloud-based architectures demand strong backend engineering, caching strategies, rate limiting, and thoughtful prompt design to remain scalable and cost-effective.
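Rate limiting is commonly implemented with a token bucket, sketched below under the assumption that each LLM request costs one permit. Note that "tokens" here are request permits, not LLM tokens. The class name and parameters are illustrative, and the injectable clock exists only to make the behavior easy to test.

```python
import time
from typing import Callable


class TokenBucket:
    """Token-bucket limiter: allows short bursts but caps the sustained request rate."""

    def __init__(self, capacity: int, refill_per_second: float,
                 clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.clock = clock
        self.tokens = float(capacity)  # start full so an initial burst is allowed
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.refill_per_second)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # caller should queue, delay, or show a "try again" state
```

With `capacity=3` and `refill_per_second=0.5`, a user can fire three requests back to back, then gets one more permit every two seconds.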

Despite these challenges, cloud-based LLMs remain the backbone of most AI-native mobile apps today because they offer unmatched intelligence, flexibility, and speed to market.

Key Points

- Enables access to the most powerful and up-to-date LLMs

- Ideal for complex reasoning, chat, and generative features

- Requires stable internet connectivity

- Higher operational cost at scale

- Strong backend, security, and latency handling are critical

📱 On-Device / Edge LLM Architectures

On-device or edge-based LLM architectures shift intelligence directly onto the user’s device, running smaller, optimized language models locally without relying on constant cloud communication. These models are typically distilled or quantized versions of larger LLMs and are deployed using mobile-optimized frameworks such as Core ML, TensorFlow Lite, or ONNX Runtime, which can offload computation to the device’s dedicated AI accelerators.
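To make "quantized" concrete, here is a plain-Python sketch of symmetric per-tensor int8 quantization, in which each weight is stored as an 8-bit integer times one shared scale factor, plus a helper for the memory arithmetic. Real toolchains for Core ML, TensorFlow Lite, and ONNX Runtime ship their own (often per-channel or 4-bit) quantizers; the function names here are hypothetical.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: w is approximated by scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    # Recover approximate float weights at inference time.
    return [scale * v for v in q]


def model_size_gb(num_params: float, bits_per_param: int) -> float:
    # Weight storage only; activations and runtime overhead come on top.
    return num_params * bits_per_param / 8 / 1e9
```

For example, `model_size_gb(7e9, 16)` gives 14.0 and `model_size_gb(7e9, 4)` gives 3.5: a 7-billion-parameter model needs roughly 14 GB for its weights at 16-bit precision but about 3.5 GB at 4-bit, which is why aggressive quantization matters on devices with limited RAM.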

As mobile hardware continues to improve—with dedicated AI chips becoming standard—on-device LLMs are rapidly evolving. While they may not fully replace cloud-based models, they are becoming an essential building block for privacy-first and performance-sensitive applications.

Key Points

- Enables offline AI functionality

- Strong privacy and data protection

- Low latency with no network round trip

- Limited reasoning and model capability

- Requires careful optimization for performance and battery life

🔄 Hybrid LLM Architectures

Hybrid architectures combine the strengths of both cloud-based and on-device LLMs, making them the most practical and scalable solution for real-world mobile apps. In this model, simpler tasks—such as intent detection, text classification, quick suggestions, or UI personalization—are handled locally on the device. More complex reasoning tasks—like deep conversations, summarization, planning, or data analysis—are delegated to powerful cloud-based LLMs.
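The routing decision at the heart of a hybrid design can be as simple as the sketch below, which keeps lightweight task types on the device and sends everything else to the cloud. The task labels and the 2,000-character input limit are hypothetical; a production router might also weigh battery level, model availability, or a confidence score from the local model.

```python
from enum import Enum


class Route(Enum):
    ON_DEVICE = "on_device"
    CLOUD = "cloud"


# Hypothetical task labels that a small local model handles well.
LOCAL_TASKS = {"intent_detection", "classification", "quick_suggestion", "ui_personalization"}


def choose_route(task: str, input_chars: int, local_max_chars: int = 2000) -> Route:
    """Keep short, lightweight tasks on the device; delegate heavier reasoning to the cloud."""
    if task in LOCAL_TASKS and input_chars <= local_max_chars:
        return Route.ON_DEVICE
    # Deep conversations, summarization, planning, data analysis, or long inputs.
    return Route.CLOUD
```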

This architecture allows apps to remain responsive, cost-efficient, and privacy-aware while still delivering advanced intelligence when needed. For example, a productivity app might use an on-device model to understand basic commands and only invoke a cloud LLM when the user requests a detailed report or explanation. This selective usage significantly reduces API costs and improves perceived performance.

Hybrid systems also enable graceful degradation. If the network is unavailable, the app can still provide basic AI functionality instead of failing completely. From a user experience perspective, this creates a more reliable and trustworthy product. From a business perspective, it offers better cost control and scalability.
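Graceful degradation can be sketched as a wrapper that tries the cloud model first and drops to the on-device model when the network call fails. The `cloud` and `local` callables are hypothetical stand-ins for real model clients; returning the source alongside the answer lets the UI signal that a reply came from the smaller offline model.

```python
from typing import Callable


def answer_with_fallback(prompt: str,
                         cloud: Callable[[str], str],
                         local: Callable[[str], str]) -> tuple[str, str]:
    """Prefer the cloud model; fall back to the on-device model if the network fails."""
    try:
        return cloud(prompt), "cloud"
    except OSError:
        # OSError covers ConnectionError, TimeoutError, and urllib's URLError.
        return local(prompt), "on_device"
```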

As AI-native apps mature, hybrid architectures are becoming the dominant pattern because they align technical constraints with real-world usage patterns. They represent a thoughtful balance between intelligence, efficiency, and responsibility.

Key Points

- Best balance of performance, cost, and privacy

- Supports offline-first and online-enhanced experiences

- Reduces dependency on constant cloud calls

- Requires more complex system design

- Emerging as the standard for production-grade apps

🔐 Privacy, Security, and Trust Considerations

Embedding LLMs into mobile apps fundamentally changes how user data is processed, analyzed, and stored, making privacy and security non-negotiable concerns. Unlike traditional apps, AI-powered applications often process free-form user input that may include personal thoughts, sensitive information, or business-critical data. This raises the stakes for how data is handled across the entire AI pipeline.

A privacy-first design begins with data minimization: sending only what is absolutely necessary to the LLM. Sensitive identifiers should be anonymized, encrypted, or processed locally whenever possible. All communication between the app and LLM APIs must be encrypted in transit using modern standards such as TLS 1.2 or later, and access tokens should be short-lived, rotated, and narrowly scoped to prevent misuse.
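Data minimization can start with a redaction pass before any text leaves the app, plus a keyed hash so the backend can link requests from the same user without sending the raw identifier to the LLM provider. This is a minimal sketch: the regular expressions are deliberately simple (they will miss some PII and flag some non-PII, such as dates), and the function names are hypothetical.

```python
import hashlib
import hmac
import re

# Deliberately simple patterns; real PII detection needs much broader coverage.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    # Replace emails first, then phone-number-like digit runs.
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def pseudonymize_user_id(user_id: str, secret_key: bytes) -> str:
    # Keyed hash (HMAC-SHA256): stable for a given user and key, but not reversible
    # or guessable without the key, which must never be sent to the LLM provider.
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()[:16]
```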

Transparency also plays a crucial role in building trust. Users should understand when they are interacting with AI, what data is being processed, and how it is used. In regulated industries such as healthcare, finance, or education, compliance with regulations and frameworks such as HIPAA, GDPR, or SOC 2 is essential. Audit logs, explainability, and human oversight are no longer optional; they are requirements.

Ultimately, trust determines adoption. No matter how powerful an AI feature is, users will abandon it if they feel unsafe. Responsible AI design is not just a legal obligation—it is a product differentiator.

Key Points

- Encrypt all AI-related data flows

- Minimize and anonymize user data

- Ensure transparency in AI usage

- Comply with industry regulations

- Trust directly impacts adoption and retention

🛠️ How Developers Embed LLMs into Mobile Apps

From a development standpoint, embedding LLMs into mobile apps requires a shift in mindset. Developers are no longer just building interfaces and APIs—they are designing intelligent systems. The process typically begins with selecting the right LLM, whether cloud-based, on-device, or hybrid, based on the app’s requirements for intelligence, privacy, and performance.

Prompt design becomes a core engineering task. System instructions, user prompts, and contextual data must be carefully structured to ensure reliable and relevant responses. Conversation state and memory management are critical, especially for apps that support long-running interactions or personalized experiences.
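Memory management often comes down to fitting the conversation into the model's context window. The sketch below keeps the system prompt plus as many of the most recent turns as fit in a token budget; the four-characters-per-token estimate is a rough heuristic for English text and an assumption here, so a real app should count with the target model's own tokenizer. Function names are hypothetical.

```python
def approx_tokens(text: str) -> int:
    # Rough heuristic (assumption): about 4 characters per token for English text.
    return max(1, len(text) // 4)


def trim_history(system_prompt: str, turns: list[dict[str, str]],
                 budget: int) -> list[dict[str, str]]:
    """Keep the system prompt plus the most recent turns that fit in the token budget."""
    used = approx_tokens(system_prompt)
    kept: list[dict[str, str]] = []
    for turn in reversed(turns):  # walk from newest to oldest
        cost = approx_tokens(turn["content"])
        if used + cost > budget:
            break
        kept.append(turn)
        used += cost
    # Restore chronological order after the system prompt.
    return [{"role": "system", "content": system_prompt}] + list(reversed(kept))
```

Older turns that fall outside the budget are simply dropped here; many apps instead replace them with a short model-generated summary so long-running conversations keep their key facts.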

Developers must also integrate LLMs with existing app data and external APIs, allowing the AI to take meaningful actions rather than just generate text. Error handling, latency management, retries, and fallback logic are essential for delivering a smooth user experience.
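Retries are usually paired with exponential backoff and random jitter so that many phones recovering from the same outage do not hit the API in lockstep. This is a minimal sketch; `TransientError` is a hypothetical exception that your client code would raise for retryable failures such as timeouts or HTTP 429/503 responses.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for retryable failures (timeouts, HTTP 429/503, ...)."""


def with_retries(call: Callable[[], T], max_attempts: int = 3, base_delay_s: float = 0.5,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return call()
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: let the caller show fallback UI
            # Full jitter: wait a random time in [0, base * 2**attempt].
            sleep(random.uniform(0, base_delay_s * 2 ** attempt))
    raise AssertionError("unreachable")  # the loop always returns or raises
```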

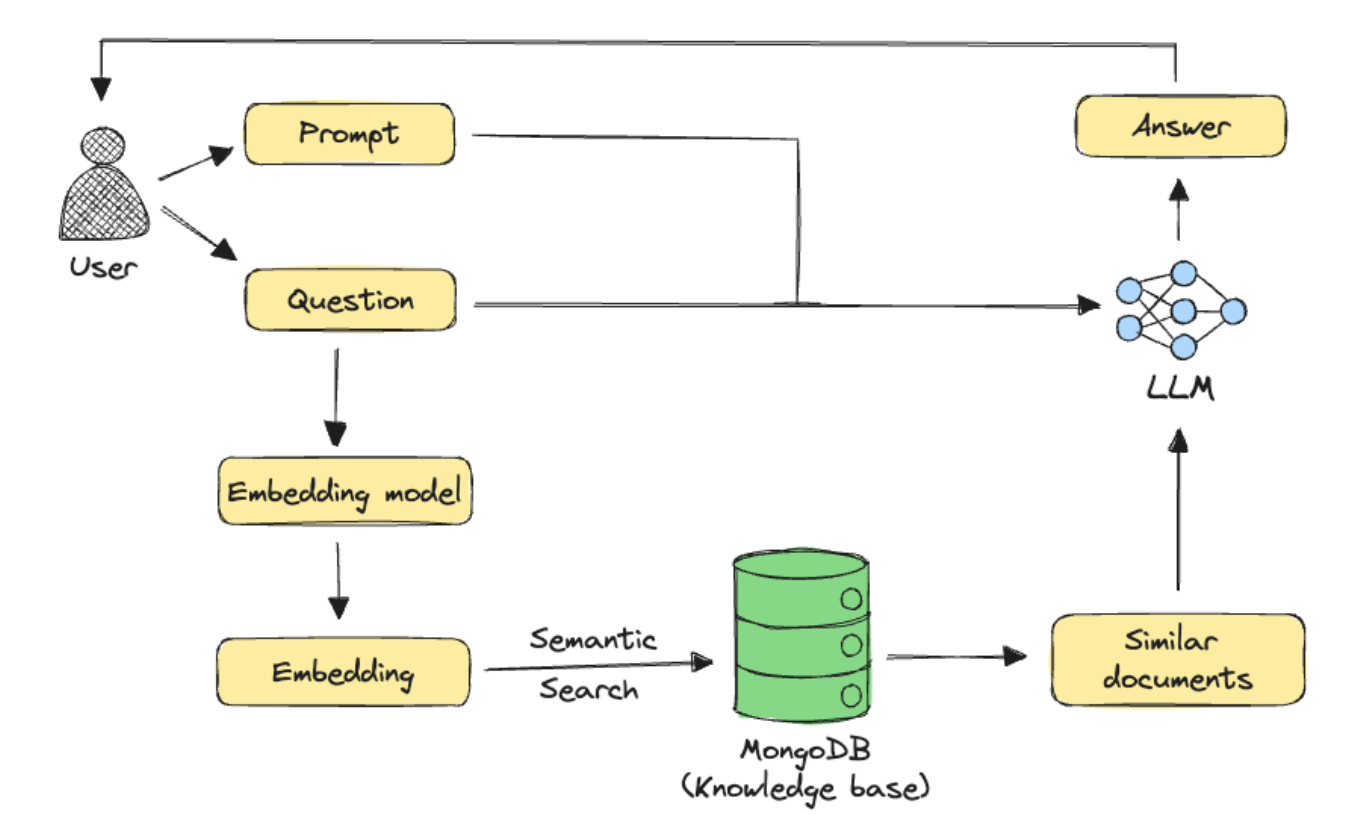

Frameworks like LangChain and LlamaIndex help orchestrate retrieval, reasoning, and tool usage, but they still require thoughtful customization. The challenge is no longer about building screens—it’s about crafting intelligent behavior.
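Whatever framework is used, letting the model "take actions" usually reduces to the same pattern: the model proposes a structured call, and the app validates it against an allow-list before running anything. The sketch below assumes a simple JSON shape (`{"tool": ..., "args": {...}}`) and two hypothetical app functions; LangChain, LlamaIndex, and provider tool-calling APIs each define their own formats.

```python
import json
from typing import Any, Callable


# Hypothetical app actions the model is allowed to trigger.
def create_reminder(title: str, time: str) -> str:
    return f"Reminder '{title}' set for {time}"


def search_notes(query: str) -> str:
    return f"Searching notes for '{query}'"


TOOLS: dict[str, Callable[..., str]] = {
    "create_reminder": create_reminder,
    "search_notes": search_notes,
}


def dispatch(model_output: str) -> str:
    """Run a model-proposed action (assumed JSON shape) only if it is allow-listed."""
    try:
        call: Any = json.loads(model_output)
    except json.JSONDecodeError:
        return model_output  # plain-text answer, no action requested
    if not isinstance(call, dict):
        return model_output
    name = call.get("tool")
    args = call.get("args", {})
    if name not in TOOLS or not isinstance(args, dict):
        return "Sorry, I can't do that from here."
    try:
        return TOOLS[name](**args)
    except TypeError:
        # Wrong or missing arguments for the chosen tool.
        return "Sorry, that request was missing some details."
```

Keeping the allow-list in app code, rather than trusting whatever tool name the model emits, is what stops a confused or manipulated model from triggering actions the user never asked for.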

Key Points

- Requires careful model and architecture selection

- Prompt engineering is critical

- Conversation memory must be managed

- AI must integrate with real app data

- Focus shifts to AI experience design

📈 Business Impact of LLM-Powered Mobile Apps

LLM-powered mobile apps can outperform traditional apps in engagement, retention, and perceived value. By enabling natural interaction, these apps reduce friction and make users feel understood. Instead of navigating menus or forms, users simply express intent, which can lead to longer sessions and deeper engagement.

From a monetization perspective, AI unlocks premium features such as personalized coaching, advanced insights, automation, and enterprise-grade assistance. Startups gain a strong competitive edge by launching differentiated AI-native experiences, while enterprises use LLMs to modernize legacy systems and improve customer satisfaction.

Importantly, the return on investment is not limited to cost savings or efficiency. AI-driven apps build emotional connection, trust, and loyalty—factors that directly influence lifetime value. In many cases, the success of an app is no longer defined by features alone, but by how intelligently it responds to users.

Key Points

- Higher engagement and retention

- Enables premium monetization models

- Differentiates products in competitive markets

- Improves customer satisfaction

- ROI extends beyond technical metrics

🔮 The Future: AI as a Native Mobile Layer

As we move forward, LLMs will increasingly feel like a native layer of mobile apps and operating systems rather than external integrations. AI agents will proactively assist users, anticipate needs, and coordinate actions across apps and services. Instead of reacting to commands, apps will understand intent and context continuously.

This shift will redefine how users interact with software. Mobile apps will evolve from isolated tools into collaborative partners that help users think, decide, and act. Developers who embed LLMs today are not just adding new features—they are preparing for a fundamentally different interaction model.

The future of mobile is not about more screens or buttons. It is about intelligence woven seamlessly into everyday experiences.

Key Points

- AI becomes a core system layer

- Proactive and context-aware assistance

- Cross-app coordination through agents

- Apps evolve into collaborators

- Early adoption prepares teams for the future

❓ Frequently Asked Questions (FAQ)

Do LLM-powered mobile apps need an internet connection?

Not always. While cloud-based LLMs require an active internet connection, many modern apps use hybrid architectures where basic AI tasks run on-device and advanced reasoning is handled in the cloud. This allows apps to offer limited AI functionality even when offline and switch to more powerful capabilities once connectivity is available.

Are on-device LLMs as capable as cloud-based models?

On-device LLMs are optimized for speed, privacy, and efficiency, but they are not as powerful as large cloud-hosted models. They work best for lightweight tasks such as intent detection, simple summarization, and quick suggestions. For complex reasoning, long conversations, or multi-step analysis, cloud-based LLMs are usually still needed.

How do teams keep LLM costs under control?

Costs are kept in check through hybrid architectures, prompt optimization, caching of frequent responses, limits on token usage, and triggering cloud calls only when necessary. Many teams also use rate limiting, usage analytics, and fallback logic to keep AI features scalable and financially sustainable.

Are LLM-powered mobile apps safe for sensitive user data?

Yes, if the app is designed responsibly. Best practices include encrypting all data in transit, minimizing the information sent to LLM APIs, anonymizing user identifiers, and processing sensitive data on-device when possible. Transparency and compliance with regulations such as GDPR or HIPAA are essential for building user trust.

What skills do developers need to build LLM-powered mobile apps?

Beyond traditional mobile development skills, developers need to understand prompt engineering, AI system design, API orchestration, context management, and error handling. Designing a good AI experience is as important as building a polished UI, making AI literacy a core skill for modern app developers.