🧠 Retrieval-Augmented Generation (RAG)

“When AI meets real-time knowledge, intelligence becomes insight.”

As Artificial Intelligence becomes more integrated into our daily work — from chatbots to business analytics tools — one major challenge remains: how to make AI truly accurate and up-to-date.

Traditional language models (like GPT, Gemini, or Claude) are powerful, but they have a limitation — their knowledge is frozen at the time they were trained.

That’s where Retrieval-Augmented Generation (RAG) changes everything.

RAG combines the power of large language models (LLMs) with real-time information retrieval, giving AI access to fresh, verified data instead of relying solely on what it already knows.

It’s like turning a textbook-trained student into one who can browse, read, and reason — all at once.

🧩 What Is Retrieval-Augmented Generation?

RAG is a hybrid AI framework that improves the accuracy, relevance, and transparency of generative models.

It works in two key stages:

| Stage | Description | Example |
|---|---|---|
| 1️⃣ Retrieval | The AI fetches relevant information from a knowledge base, documents, or web sources. | Searching internal company data or public APIs for the latest reports. |
| 2️⃣ Generation | The AI uses that retrieved information to generate a contextually accurate and fact-based answer. | Summarizing those reports into a clear, human-readable explanation. |

Traditional AI guesses; RAG looks up and answers.
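
To make the two stages concrete, here is a minimal sketch in plain Python. It is a toy, not a production design: the knowledge base is a hypothetical list of three sentences, retrieval is simple word overlap rather than a real search index, and `generate` is a stand-in for the call to a language model.

```python
import re

# Hypothetical mini knowledge base; in practice this would be documents or API results.
KNOWLEDGE_BASE = [
    "The Q3 report shows revenue grew 12% year over year.",
    "The refund policy allows returns within 30 days of purchase.",
    "Office hours are Monday to Friday, 9am to 5pm.",
]

def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question: str, documents: list[str]) -> str:
    """Stage 1: return the document sharing the most words with the question."""
    q = tokenize(question)
    return max(documents, key=lambda doc: len(q & tokenize(doc)))

def generate(question: str, context: str) -> str:
    """Stage 2: stand-in for an LLM call; a real system would send both to a model."""
    return f"Based on the retrieved source: {context}"

question = "What is the refund policy for returns?"
context = retrieve(question, KNOWLEDGE_BASE)
print(generate(question, context))
```

The point of the split is that the answer is built from whatever `retrieve` returns, so updating the knowledge base changes the answer without touching the model.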

🎓 Why RAG Is a Game-Changer for Students, Clients, and Businesses

👩‍🎓 For Students

- RAG-powered study assistants can access the latest research papers, textbooks, and online articles to generate well-informed summaries or project ideas.

- Instead of generic answers, students get referenced and updated responses based on credible data.

- Pairing an LLM such as ChatGPT with a vector store (like Pinecone or FAISS) lets students build personal AI research bots.

💼 For Businesses

- RAG improves enterprise chatbots, knowledge assistants, and internal search systems by connecting them to company databases.

- AI agents can pull real-time policy documents, FAQs, or CRM data to provide personalized support.

- It reduces hallucinations (false AI responses) and builds trust by showing sources.
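
One way to picture "showing sources" is an assistant that answers only when a retrieved passage supports the question and otherwise says it found nothing. The sketch below assumes a simple word-overlap score and a hypothetical threshold of 0.3; the file names are illustrative, and a real system would use a proper retriever and pass the supporting text to an LLM.

```python
from dataclasses import dataclass

@dataclass
class Passage:
    source: str  # where the text came from, shown to the user
    text: str

@dataclass
class Answer:
    text: str
    sources: list[str]

def overlap_score(question: str, passage: Passage) -> float:
    """Fraction of question words that also appear in the passage."""
    q_words = set(question.lower().split())
    p_words = set(passage.text.lower().split())
    return len(q_words & p_words) / len(q_words) if q_words else 0.0

def answer_with_sources(question: str, passages: list[Passage], min_score: float = 0.3) -> Answer:
    """Answer only from passages that clear the threshold; otherwise abstain."""
    supported = [p for p in passages if overlap_score(question, p) >= min_score]
    if not supported:
        return Answer("I could not find a source for that.", [])
    # Placeholder for the LLM call: here we simply quote the supporting text.
    return Answer(" ".join(p.text for p in supported), [p.source for p in supported])

passages = [
    Passage("hr-policy.pdf", "employees receive 20 days of annual leave"),
    Passage("faq.md", "support tickets are answered within one business day"),
]
print(answer_with_sources("how many days of annual leave do employees receive", passages))
print(answer_with_sources("what is the parking fee", passages))
```

Returning the source list alongside the text is what lets a user check the claim, and abstaining when nothing matches is a simple guard against made-up answers.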

🧑‍💻 For Developers and Clients

- RAG enables businesses to create domain-specific AI assistants without retraining or fine-tuning an entire LLM.

- It allows teams to plug in custom data pipelines — from PDFs to SQL databases — making AI more relevant and cost-efficient.

- Clients using RAG see a 70–80% drop in misinformation and improved automation accuracy.
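
As an illustration of plugging a SQL source into a retrieval pipeline, the sketch below turns database rows into small text documents that a retriever could index. The `tickets` table, its columns, and the sample rows are hypothetical, and an in-memory SQLite database stands in for a real CRM or support system.

```python
import sqlite3

def rows_to_documents(conn: sqlite3.Connection) -> list[dict]:
    """Turn each row of the (hypothetical) tickets table into a text document."""
    cursor = conn.execute("SELECT id, customer, issue FROM tickets ORDER BY id")
    return [
        {"id": f"tickets/{row_id}", "text": f"Customer {customer} reported: {issue}"}
        for row_id, customer, issue in cursor
    ]

# In-memory database standing in for a real CRM or support system.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE tickets (id INTEGER PRIMARY KEY, customer TEXT, issue TEXT)")
conn.executemany(
    "INSERT INTO tickets (customer, issue) VALUES (?, ?)",
    [("Acme", "login page times out"), ("Globex", "invoice total is wrong")],
)
documents = rows_to_documents(conn)
print(documents[0])
```

Keeping an `id` that points back to the row means an answer can later cite exactly which record it came from.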

⚙️ How RAG Works: Step-by-Step

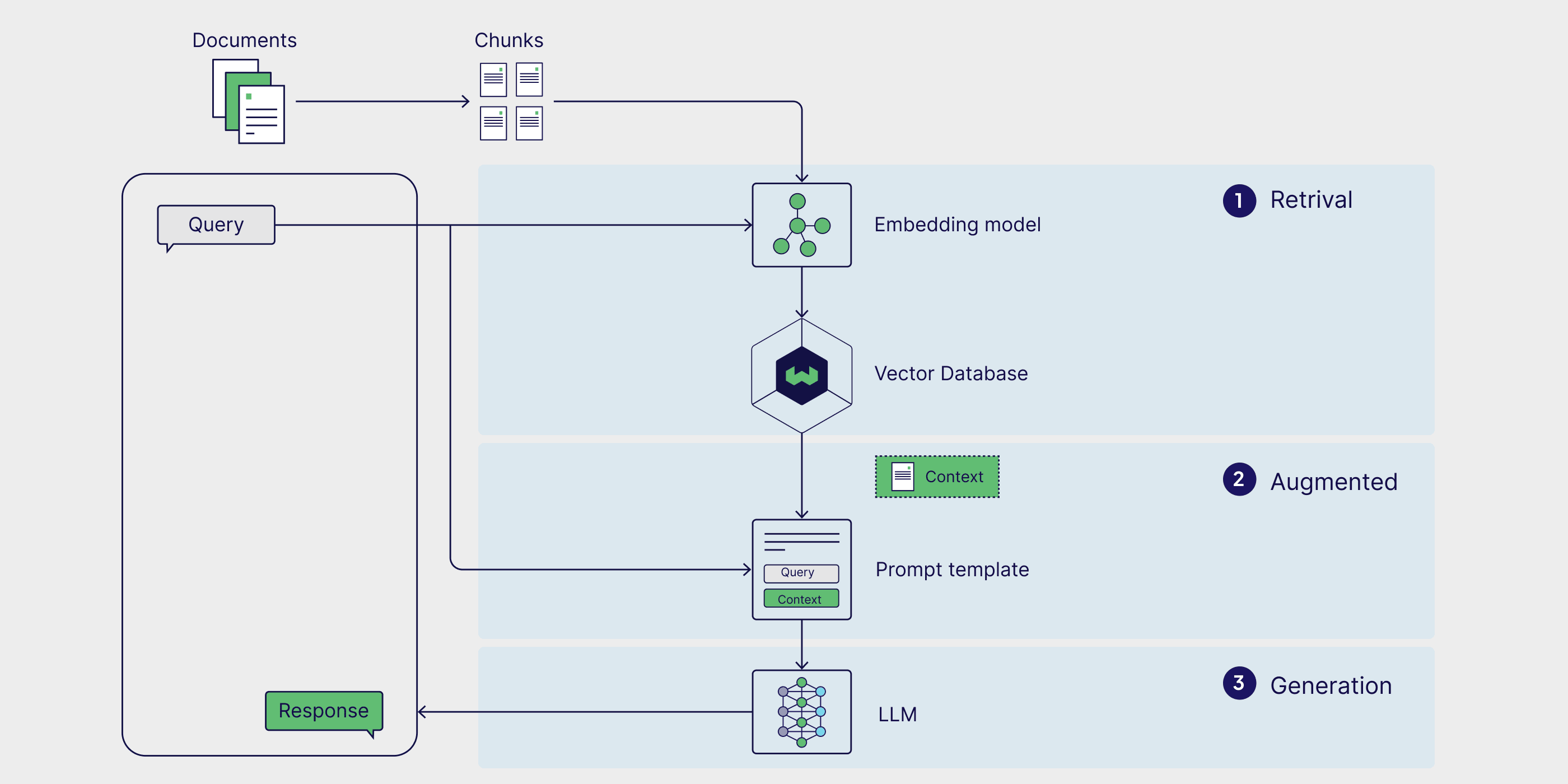

Here’s how a RAG-based AI workflow operates:

| Step | Description | Example |
|---|---|---|
| 1️⃣ Query Input | The user asks a question. | “What were our top sales regions in 2024?” |
| 2️⃣ Document Retrieval | The system fetches relevant data from company reports or APIs. | Pulls info from MongoDB or Excel sheets. |
| 3️⃣ Context Injection | The retrieved data is combined with the user’s query. | AI adds relevant stats to the prompt. |
| 4️⃣ Answer Generation | The model generates a clear, human-like response with citations. | “Your top regions were California, Texas, and Florida, with sales up by 24%.” |

This makes RAG explainable, auditable, and highly practical for real-world decision-making.
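
The context-injection step is where most of the "explainable" part comes from, so it is worth seeing in code. The sketch below assumes retrieval has already happened and uses two hypothetical source snippets that mirror the table's example; it only builds the prompt and leaves the model call out.

```python
def build_prompt(query: str, retrieved: list[dict]) -> str:
    """Step 3: combine numbered source snippets with the user's question."""
    context = "\n".join(
        f"[{i}] ({doc['source']}) {doc['text']}"
        for i, doc in enumerate(retrieved, start=1)
    )
    return (
        "Answer using only the sources below and cite them as [n].\n\n"
        f"Sources:\n{context}\n\n"
        f"Question: {query}"
    )

# Step 1: the user's question.
query = "What were our top sales regions in 2024?"

# Step 2: stand-in for retrieval results; file names and text are hypothetical.
retrieved = [
    {"source": "sales_2024.xlsx", "text": "Top regions by revenue: California, Texas, Florida."},
    {"source": "q4_summary.pdf", "text": "Sales were up by 24%."},
]

# Step 3: context injection.
prompt = build_prompt(query, retrieved)
print(prompt)
# Step 4 would send `prompt` to a language model; that call is omitted here.
```

Because each snippet carries a number and a source name, the model can cite `[1]` or `[2]` in its answer and a reviewer can trace every statement back to a file.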

🏢 How Companies Are Using RAG in 2025

In 2025, Retrieval-Augmented Generation (RAG) has evolved from an experimental concept into a foundational component of enterprise AI systems. Leading technology companies are embedding RAG frameworks into their ecosystems to improve accuracy, transparency, and personalization — reshaping how data-driven organizations operate.

Microsoft Copilot, for example, integrates RAG to fetch real-time organizational data from SharePoint, Teams, and Outlook. This allows employees to ask natural questions like “Summarize last week’s project updates” or “Find the latest marketing plan from the design team” — and receive immediate, data-backed responses. The result is higher workplace productivity, fewer manual searches, and more reliable decision-making.

OpenAI, through ChatGPT Enterprise, has implemented private RAG pipelines for corporate clients. These systems retrieve secure, company-specific data while maintaining full privacy and compliance. Instead of giving generic answers, ChatGPT Enterprise now provides responses grounded in verified organizational knowledge, making it a trusted companion for business strategy, analytics, and client support.

Google Cloud AI has also taken major strides by embedding RAG for enterprise knowledge management. Its system retrieves data from internal repositories and knowledge bases before generating an answer. This greatly reduces the problem of AI “hallucination” — where models make up information — and significantly increases user trust in AI-generated content.

At Mystic Matrix Technologies, RAG sits at the heart of innovation. The company is developing advanced AI dashboards and autonomous assistants powered by vector databases and frameworks like LlamaIndex. These solutions enable clients and students to interact with data in real-time, asking complex questions and receiving accurate, contextually aware responses. Whether it’s a university analyzing student performance or a business monitoring workflow efficiency, Mystic Matrix’s RAG-powered systems deliver reliable insights that drive smarter decisions.

🌐 How to Implement RAG in Your Own Workflow

You don’t need enterprise-grade infrastructure to start working with RAG — even students, freelancers, or small startups can integrate it using open-source tools and cloud services.

Here’s how the typical RAG setup works:

The Language Model (LLM) — such as GPT-4, Claude, or Llama 3 — handles the text generation and reasoning.

A Vector Database — like Pinecone, FAISS, or Weaviate — stores data embeddings, allowing the system to retrieve semantically relevant documents.

A Document Loader, typically provided by LangChain or LlamaIndex, connects your text files, PDFs, or APIs to the AI’s retrieval pipeline.

Finally, the RAG Framework, often implemented with LangChain’s retrieval chains, orchestrates the process, ensuring that retrieval and generation work together seamlessly.

This architecture allows users to build retrieval-enhanced assistants, research bots, or analytics tools that provide factually grounded, context-aware answers.
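
To show what the vector database contributes, here is a tiny in-memory stand-in. It is a sketch under loud assumptions: `embed` uses raw word counts, which only capture shared words, whereas real systems use a learned embedding model that captures meaning; `TinyVectorStore` is a hypothetical class, not the API of FAISS, Pinecone, or Weaviate.

```python
import math
from collections import Counter

def embed(text: str) -> Counter:
    """Toy embedding: word counts. Real systems use a learned embedding model."""
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

class TinyVectorStore:
    """In-memory stand-in for a vector database (hypothetical, for illustration)."""

    def __init__(self) -> None:
        self.items: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        """Store the text together with its vector."""
        self.items.append((text, embed(text)))

    def search(self, query: str, k: int = 1) -> list[str]:
        """Return the k stored texts most similar to the query."""
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]

store = TinyVectorStore()
store.add("neural networks learn patterns from data")
store.add("the cafeteria menu changes every week")
print(store.search("how do neural networks learn"))
```

The shape is the same as in a real setup: texts are converted to vectors once when added, and each query is converted to a vector and compared against them by similarity.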

For example, here’s a simple Python implementation using LangChain:

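```python
# Assumes LangChain 0.2/0.3 with the langchain-openai, langchain-community and
# faiss-cpu packages installed; import paths differ in other releases.
from langchain.chains import RetrievalQA
from langchain_community.vectorstores import FAISS
from langchain_openai import ChatOpenAI, OpenAIEmbeddings

# Step 1: Create an embedding store (needs OPENAI_API_KEY in the environment)
embeddings = OpenAIEmbeddings()
vectorstore = FAISS.from_texts(["AI is transforming business processes"], embeddings)

# Step 2: Build the RAG chain; GPT-4 is a chat model, so use the chat wrapper
llm = ChatOpenAI(model="gpt-4")
qa = RetrievalQA.from_chain_type(llm, retriever=vectorstore.as_retriever())

# Step 3: Ask the question; invoke() returns a dict with the answer under "result"
response = qa.invoke({"query": "How is AI changing industries?"})
print(response["result"])
```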

💡 Students and developers can easily experiment with this setup in Google Colab or VS Code to see RAG in action — building mini “intelligent assistants” that can reference custom datasets.
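
When you point a setup like this at your own dataset, long documents usually need to be split into smaller pieces before they are embedded. The sketch below shows one common approach, fixed-size word chunks with some overlap; the sizes are arbitrary illustrative defaults, and libraries such as LangChain ship their own text splitters that you would normally use instead.

```python
def chunk_text(text: str, chunk_size: int = 40, overlap: int = 10) -> list[str]:
    """Split text into word-based chunks that share `overlap` words with the previous one."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks

# Hypothetical 100-word document standing in for your notes or a PDF's text.
notes = " ".join(f"word{i}" for i in range(100))
chunks = chunk_text(notes, chunk_size=40, overlap=10)
print(len(chunks), [len(c.split()) for c in chunks])
```

The overlap keeps a sentence that straddles a boundary retrievable from either side, at the cost of storing some words twice.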

🧭 How RAG Can Be Used in Daily Life

RAG isn’t just for tech giants — it’s for everyone who interacts with data.

- 📚 For Students: Create your own RAG-based study assistant that references textbooks, notes, and PDFs. You can ask it questions like “Summarize chapter 5 of my AI notes” or “Compare reinforcement learning with supervised learning.”

- 💼 For Professionals: Build internal RAG dashboards that retrieve live data from company systems — CRM, sales records, HR logs — and summarize them into actionable insights.

- 🏠 For Individuals: Use RAG to manage your personal knowledge — have an AI summarize saved articles, notes, or research ideas.

- 🧑‍🏫 For Educators: Deploy FAQ bots powered by RAG to fetch course-related answers dynamically, making learning support available 24/7.

In essence, RAG merges the factual accuracy of Google Search with the conversational intelligence of ChatGPT — giving users the best of both worlds.

🌟 Final Thought

Retrieval-Augmented Generation isn’t just an upgrade — it’s a revolution in how AI thinks, reasons, and interacts with knowledge.

From enterprise workflows to classrooms and startups, RAG is empowering a new era of trustworthy, verifiable, and context-driven AI systems.

At Mystic Matrix Technologies, we believe that the future of AI lies not in replacing human intelligence — but in augmenting it with real-time information and responsible reasoning.

“RAG transforms AI from guessing to knowing — and from answering to understanding.”

❓ Frequently Asked Questions (FAQ)

How is RAG different from a regular AI chatbot?

Regular AI chatbots generate answers from pre-trained data, while RAG retrieves current information from knowledge sources, resulting in up-to-date, source-grounded answers.

Do I need advanced coding skills to use RAG?

Not necessarily. Platforms like LangChain, LlamaIndex, and ChatGPT Enterprise provide low-code RAG tools for beginners and businesses.

How does RAG benefit businesses?

RAG helps create accurate, reliable, and customized AI assistants that work securely with internal business data.

Can students use RAG?

Yes! Students can use RAG to summarize research papers, build AI tutors, or automate study notes, all using open-source libraries.

Is RAG the future of AI?

Absolutely. RAG is paving the way for “grounded intelligence”, where AI combines real-world retrieval with human-like reasoning to produce trusted, verifiable results.